Automatic Derivations Part 1¶

I recently talked about my vision of a different kind of computer algebra tool. Like symypy but more transparent. It would tell you what it tried and what failed; for example, during a symbolic integration procedure. It could also help you work out derivations. Take the simple example below. I find that, with such things, the answer either jumps right out at you (suggesting a pattern matching procedure in the brain) or requires some trial and error constrained by applicable rules to reverse engineer the in-between steps. Today, I had time to try my hand at automating the latter.

$$-\frac{1 + \ln{2\pi{{\sigma_{1}}}^{2}}}{2} + \frac{\ln{2\pi{{\sigma_{2}}}^{2}}}{2} \Longrightarrow -\frac{1}{2} + \ln{\frac{{\sigma_{2}}}{{\sigma_{1}}}}$$

In the code below, the algorithm searches for a combination of steps that will take the initial expression to the target. Exploration is also biased towards string renderings that are most similar to our target.

let transformExpr targetexpr sourceexpr =

let targetstr = Expression.toPlainString targetexpr

let containsLog = Structure.filterRecursive exprContainsLog sourceexpr

let options' = //remove operations that are un-needed

List.filter

(fun (_,str: string) -> not(str.Contains "logarithm") || containsLog)

options

let rec loop path currentexpr =

cont {

let! chosenOp,desc = uniform options'

do! constrain (List.isEmpty path || List.head path <> desc)

let expr' = chosenOp currentexpr

if expr' = targetexpr then return List.rev (desc::path)

else

do! constrain(currentexpr <> expr')

let str' = Expression.toPlainString expr'

//bias search towards directions that are more like our target

let reward = stringSimilarityDice targetstr str'

let! p = uniform_float 20

do! constrain(reward > p)

return! loop (desc::path) expr'

}

loop [] sourceexpr

The search algorithm returns a set of strings which capture the instructions to be followed. At each stage, expressions are transformed closer to our target. This next section of code takes that list of strings and looks up the corresponding transformation, writing out the latex that describes the operation and its annotation.

let runProgram (e:Expression) ops =

let start = sprintf "\\begin{align*} \\hline \n %s \\tag{Initial Expression}" (e.ToFormattedString())

let rec loop s expr = function

| [] -> s

| op::ops' ->

let expr' = lookup.[op] expr

let str' = sprintf " \n\\\\%s \\tag{%s}" (expr'.ToFormattedString()) op

loop (s + str') expr' ops'

loop start e ops + "\n \\end{align*} "

With that, the setup is done and we can search/sample the model. Using a relatively low max depth and asking for 50 samples, it usually takes ~60-100 ms and returns 3-7 derivations. Note that in a final package or library, front ends that abstract the below would be written. As can be seen, embedding these searches in a probabilistic programming framework allows us to propagate probabilities and gives us a notion of elegance in terms of how many steps a derivation takes. The $-log(p(\mathord{\cdot} ))$ of this model can also be seen as corresponding to distance traveled, where, less likely paths have higher values.

let searcher = Model(transformExpr target source)

searcher.ImportanceSample(nsamples = 50,maxdepth = 8)

|> Utils.normalize

|> List.sortByDescending fst

|> ProbVal.map(runProgram source)

|> toLatexRows

|> String.concat " "

|> Util.Latex

Working with Equalities¶

In the above, steps were taken by applying known transformations and rules. Working with a knowledge base will require working with derived quantities and performing substitutions. The next section covers a simple example of this: deriving bayes rule. The following code accepts a given equation and finds all rearrangements of all terms.

let deriveTrivialEqualitiesSingle(e1,eq) =

[yield Equation(e1,eq)

for var in findVariablesOfExpression eq do

match reArrangeEquation0 true var (eq,e1) with

| Identifier _ as var,req -> yield Equation(var,req)

| _ -> ()]

let deriveTrivialEqualities(eqs: Equation list) =

let deqs =

[for eq in eqs do

yield! deriveTrivialEqualitiesSingle eq.Equalities.Head]

Hashset deqs |> Seq.toList

let genEqualitiesList(eqs: Equation list) =

[for e in eqs do

yield! e.Equalities]

let ``P(A|B)`` = symbol "P(A|B)"

let ``P(A ∩ B)`` = symbol "P(A ∩ B)"

let ``P(B)`` = symbol "P(B)"

let ``P(A)`` = symbol "P(A)"

let ``P(B|A)`` = symbol "P(B|A)"

let equalities =

deriveTrivialEqualities [``P(A|B)`` </ equals /> (``P(A ∩ B)`` / ``P(B)``)

``P(B|A)`` </ equals /> (``P(A ∩ B)`` / ``P(A)``)]

let generatedEqualities = genEqualitiesList equalities

equalities

These automatically derived equalities can then be substituted with/for during search. The path between expressions is found by looking for an applicable substitution, applying it and then checking if the termination condition is met.

let findPathUsingEqualities terminationCondition equalities (seen: Hashset<_>)

startExpression targetExpression =

let rec search path currentExpression =

cont {

if terminationCondition targetExpression currentExpression then

return (List.rev path)

else

let applicable =

List.filter

(fst >> flip containsExpression currentExpression)

equalities

match applicable with

| [] -> return! fail()

| _ ->

let! e1,e2 = uniform applicable

let expressionNew =

replaceExpression e2 e1 currentExpression

do! constrain

(not

(seen.Contains

(Rational.rationalize expressionNew)))

let msg =

sprintf

@" = %s \;\;\; \left( \textrm{because} \; %s = %s\right)"

(expressionNew.ToFormattedString())

(e1.ToFormattedString()) (e2.ToFormattedString())

seen.Add expressionNew |> ignore

return! search (msg :: path) expressionNew

}

let start = sprintf "%s"

(Expression.toFormattedString startExpression)

search [start] startExpression

let model seen = Model(findPathUsingEqualities containsExpression generatedEqualities seen ``P(A|B)`` ``P(B|A)``)

(model(Hashset())).Reify()

|> Utils.normalize

|> ProbVal.map (fun s -> sprintf "$$ %s $$" (String.concat " \n \\\\ " s))

|> toLatexRows

|> String.concat " "

|> Util.Latex

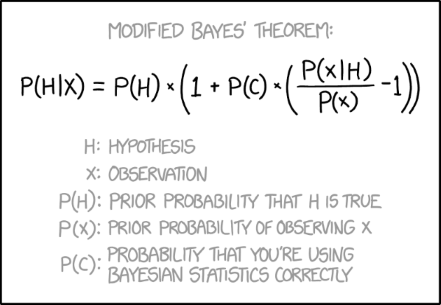

Because $P(A|B) \neq P(B|A)$, we need to be careful with our termination criterion. Here, I use the requirement that $P(B|A)$ exists in the final expression. Without this (for example, requiring equality), the algorithm will terminate with no result. With it, the algorithm quickly produces the result. Indeed, the space is highly constrained, not needing much search and can be exactly computed.

Given just two definitions, of $P(A|B)$ and $P(B|A)$, the procedure is able to derive the solution, which just happens to be Bayes Theorem. I provided only one of the used facts to arrive at this result. Nothing in the algorithm is specialized to getting this result. A goal for this project is to input much of what might be found in cheat sheets on calculus, linear algebra, analysis, probability etc. and then have the software explore and suggest with that knowledge base.

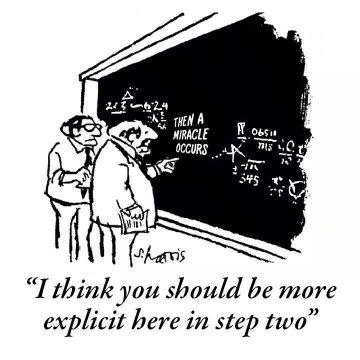

Another use would be to fill in gaps that authors currently cannot be bothered to. Often, calculations and derivations are separately done by the authors, foisting only their complex outputs on the reader. Software that could fill in gaps and annotate when an author has a particular approach in mind (perhaps collapsible in published form), would (hopefully) increase the number of readers able to double check claimed results as well as reduce the chances of small mistakes.

A more Complex Example¶

The final example extends the previous function with the ability to alternate between substitution and performing either a multiply or divide. With this, multiple interesting (and some ridiculous) paths from $P(A|B)$ to $P(B|A)$ are quickly found.

let findPath equalities (seen: Hashset<_>) startExpression targetExpression =

let rec search path currentExpression =

cont {

seen.Add startExpression |> ignore

if targetExpression = currentExpression then

return List.rev

(Expression.toFormattedString currentExpression

:: path)

else

let! replace = bernoulli 0.5

if replace then

let applicable =

List.filter

(fst >> flip containsExpression currentExpression)

equalities

match applicable with

| [] -> return! fail()

| _ ->

let! e1,e2 = uniform applicable

let expressionNew =

replaceExpression e2 e1 currentExpression

do! constrain

(not

(seen.Contains

(Rational.rationalize expressionNew)))

let msg =

sprintf

@" = %s \; \left( \textrm{because} \; %s = %s\right)"

(expressionNew.ToFormattedString())

(e1.ToFormattedString())

(e2.ToFormattedString())

seen.Add expressionNew |> ignore

return! search (msg :: path) expressionNew

else

let variables = findVariablesOfExpression currentExpression

let! desc,op = uniform

((List.map

(fun (x: Expression) ->

("*" + (x.ToFormattedString())),

( * ) x) variables)

@ (List.map

(fun (x: Expression) ->

("/" + (x.ToFormattedString())),

flip (/) x) variables))

let nextExpression = op currentExpression

do! constrain

(not

(seen.Contains

(Rational.rationalize nextExpression)))

let msg =

sprintf @"%s = %s %s"

(nextExpression.ToFormattedString())

(currentExpression.ToFormattedString()) desc

return! search (msg :: path) nextExpression

}

search [Expression.toFormattedString startExpression] startExpression

let model2 seen = Model(findPath generatedEqualities seen ``P(A|B)`` ``P(B|A)``)

(model2(Hashset())).ImportanceSample(2000,15)

|> List.sortByDescending fst

|> Utils.normalize

|> ProbVal.map (fun s -> sprintf "$$ %s $$" (String.concat " \n \\\\ " s))

|> toLatexRows

|> String.concat " "

|> Util.Latex

Related Work¶

The idea of this article was to explore the concept of a different approach to computer algebra—one that amplifies an individual's ability to both produce and consume mathematics. This is done by having methods that allow the manipulation of expressions at a more direct level instead of blackbox methods that do a lot at once. Additionally, methods that aid with derivations and are transparent in their steps are explored.

There are other works that can be said to be similar in theme:

Mathematica¶

I would be remiss to not mention Mathematica. It has an incredible amount of functionality, and with Wolfram Alpha, is unmatched as a scientific notebook tool. Unfortunately, it is quite expensive and inaccessible to many. This work is distinguished from it by having a bit more emphasis on exploring math through non deterministic search tools.

Konrad Hinsen¶

Konrad Hinsen has done a lot of interesting work in and written a lot about the mutually reinforcing aspects of math and programming. His work is focused on languages that aid in the communication and reproduceability of scientific work.

Lean¶

This project is a bit different, being a verifier and also operating on full proofs but nonetheless shares a similar motivation of wanting to use computers to improve the pedagogy and doing of mathematics.

SIN and SAINT¶

In the 1960s Moses and Slagle worked on symbolic integrators patterned on how a human might do integration. It is mind boggling to me that they managed to do all that back then on such limited compute. This is to me more impressive than playing ATARI arcade games from pixels. Today, the main difference I suppose, is what would have taken them weeks or months might now take me hours or days using much fewer lines of task specific instruction. Nonetheless (like SHRDLU or Eliza), it's completely astounding what they managed to achieve with so little.

Summary and Next¶

In this, I have used small examples to explore a different branch off the usual approach to computer algebra. In particular, one focused on communication, amplifying a user's ability to consume or produce mathematical artifacts and learning.

In the short term, I aim to get transparent integration at least as good as a math grad student. I currently see no barrier to this. Medium term after that will be to derive the relative entropy of two normal distributions.

Further on will be using knowledge bases to derive things in Vector calculus, variational calculus, information geometry and even category theory. I am more uncertain here but results are promising.

In essence, the goal is to have a quite capable math assistant which augments your ability to quickly apply new math knowledge. The idea here is that, though unused math quickly decays, if put in this system, it will maintain indefinitely, ready to be reused even if you no longer remember. Or even better, it could jump start and accelerate your own recall. I also envision using it to check derivations in math heavy papers where, even if you can follow, it's often too much of a bother.

In general, I am operating under the assumption of, if a human can follow the derivation, then the computation should be completeable in a timely manner. I also see high pedagogical utility.

In the next post I'll look at combining the above two approaches into a single one but unlike these, it will be an open ended search where the goal is to simplify the input expression:

I'll also be looking at filling in steps of some quite simple proofs.

Conclusion¶

With machine learning you can write something that can categorize images better than most humans. But you can also do useful things with symbolic AI: write things that can do math better than most humans.

While Deep Learning is well suited to image processing, sound somewhat and natural language passably (consider the scope and not limited and quite meaningless metrics), symbolic approaches do well in tasks that correspond to high level human reasoning. Here, I have focused on mathematics but similar can be seen with a human like approach to learning from limited data.

2018-11-25